Some users have asked whether it's possible to run Ollama on an external server or, as in this case, on WSL (Windows Subsystem for Linux), and connect it to CodeGPT. The key to everything is making sure the service is accessible from localhost, and several system requirements must be met to guarantee that.

This guide will help you set up an Ollama service in WSL and connect it with the CodeGPT extension in VSCode. Make sure to follow each step carefully to ensure the service is accessible from localhost.

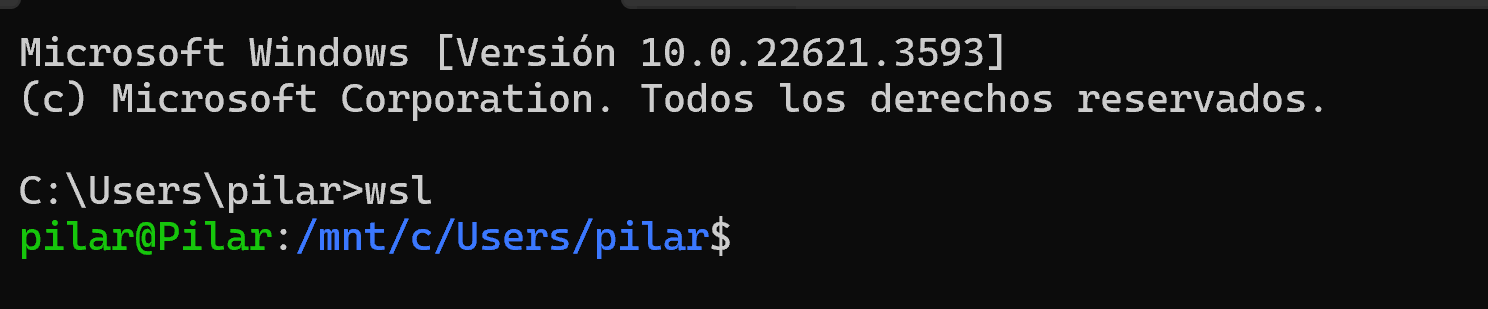

Step 1: Install WSL

- Open Command Prompt as Administrator: Right-click the Start button and select "Command Prompt (Admin)" or "Windows PowerShell (Admin)."

- Install WSL: Run the following command:

wsl --install

- Restart your computer.

- Launch Ubuntu: Open it from the desktop or Start menu, or type "wsl" in the Command Prompt.

- For more details, check the official Microsoft guide.

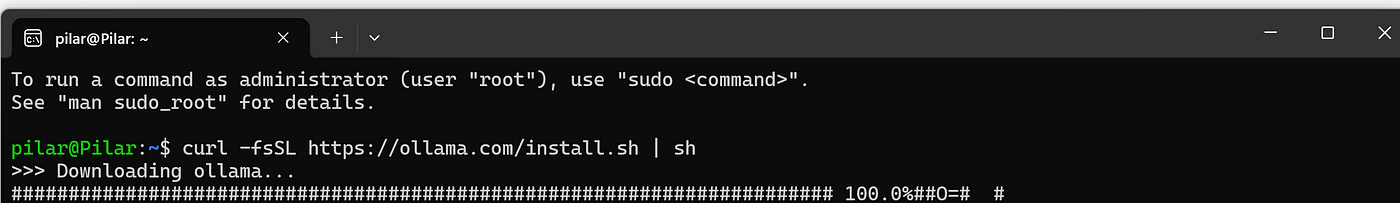
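To confirm that a shell is really running inside WSL before continuing, a quick check can help. The sketch below is a heuristic of our own, not an official WSL API. It assumes that WSL kernels mention "microsoft" in /proc/version and that WSL sets the WSL_DISTRO_NAME environment variable. The function name looks_like_wsl is hypothetical.

```python
import os
from pathlib import Path


def looks_like_wsl(proc_version: str, env: dict) -> bool:
    """Heuristic check (an assumption, not an official API): WSL kernels
    mention 'microsoft' in /proc/version, and WSL sets WSL_DISTRO_NAME."""
    return "microsoft" in proc_version.lower() or "WSL_DISTRO_NAME" in env


def main() -> None:
    version_file = Path("/proc/version")
    # /proc/version only exists on Linux; a missing file means "not WSL".
    text = version_file.read_text() if version_file.exists() else ""
    if looks_like_wsl(text, dict(os.environ)):
        distro = os.environ.get("WSL_DISTRO_NAME", "unknown distro")
        print(f"Running inside WSL ({distro}).")
    else:
        print("This does not look like a WSL shell.")


if __name__ == "__main__":
    main()
```

Run it with python3 from the Ubuntu shell you just launched. On a native Linux machine it should report that the shell does not look like WSL.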

Step 2: Install Ollama

- Open Ubuntu in WSL.

- Install Ollama: Run the following command to download and install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

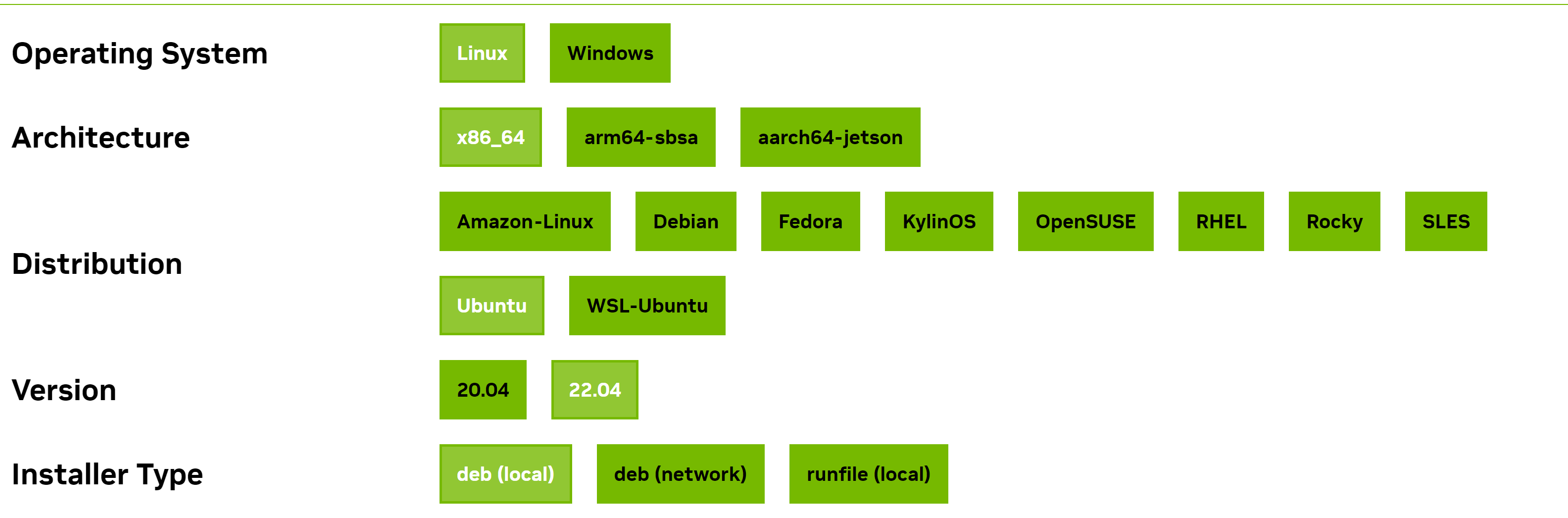

- Install GPU requirements (if necessary):

  - Check which Linux distribution and version is installed in WSL with the "lsb_release -a" command.

  - For Ubuntu 22.04, follow the instructions on the CUDA Toolkit 12.1 Downloads page.

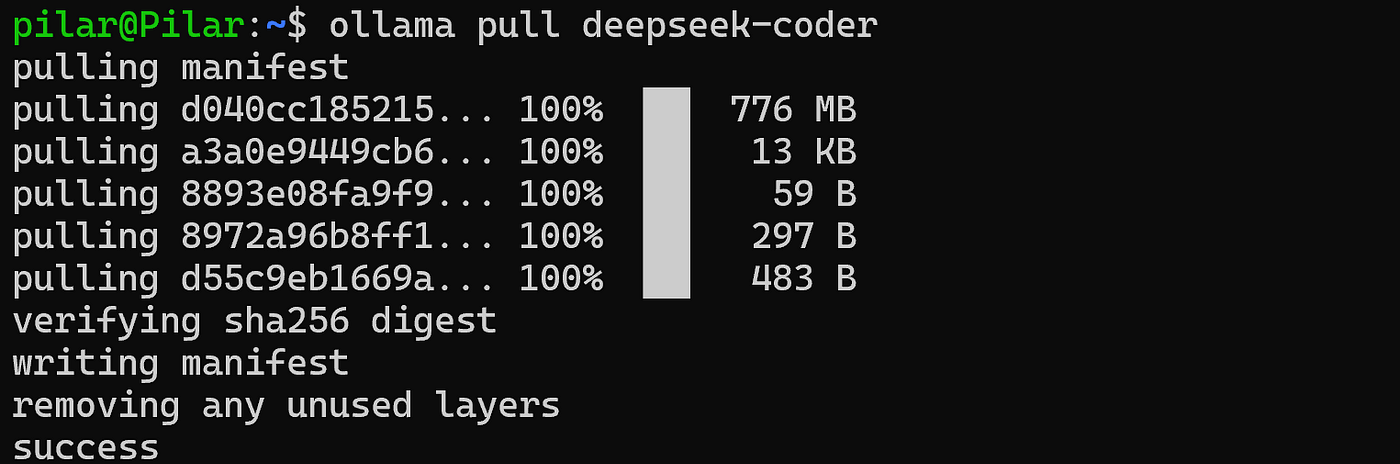

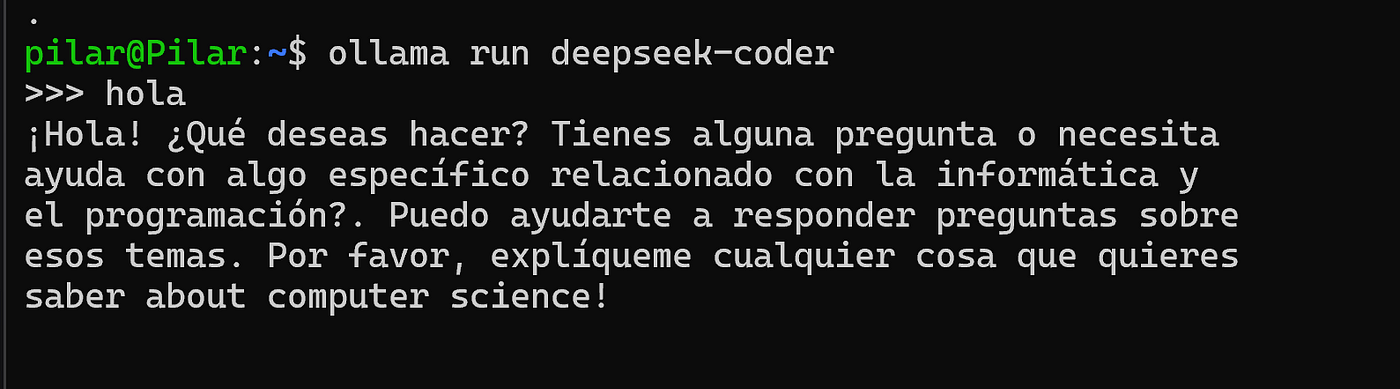
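If you prefer to script the version check instead of reading the lsb_release output by eye, the following sketch reads the same kind of information from /etc/os-release, the standard distribution-info file on Ubuntu. It is only an illustration. The helper names parse_os_release and is_ubuntu_2204 are hypothetical, and the script only tells you whether the Ubuntu 22.04 CUDA instructions mentioned above apply. It does not install anything.

```python
from pathlib import Path


def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines (as found in /etc/os-release) into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be wrapped in double or single quotes.
        info[key] = value.strip().strip("\"'")
    return info


def is_ubuntu_2204(info: dict) -> bool:
    """True if the parsed data describes Ubuntu 22.04."""
    return info.get("ID") == "ubuntu" and info.get("VERSION_ID") == "22.04"


if __name__ == "__main__":
    path = Path("/etc/os-release")
    info = parse_os_release(path.read_text()) if path.exists() else {}
    print(info.get("PRETTY_NAME", "unknown distribution"))
    if is_ubuntu_2204(info):
        print("Ubuntu 22.04: follow the CUDA Toolkit 12.1 instructions for this release.")
    else:
        print("Look up the CUDA Toolkit instructions for your distribution and version.")
```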

Step 3: Install the Model

- Download a model: Run "ollama pull MODEL_NAME" to install the model you need. I chose DeepSeek-Coder ("ollama pull deepseek-coder"), but there are many others.

- Test the model: Make sure the model responds correctly to requests, for example by chatting with it through "ollama run MODEL_NAME".

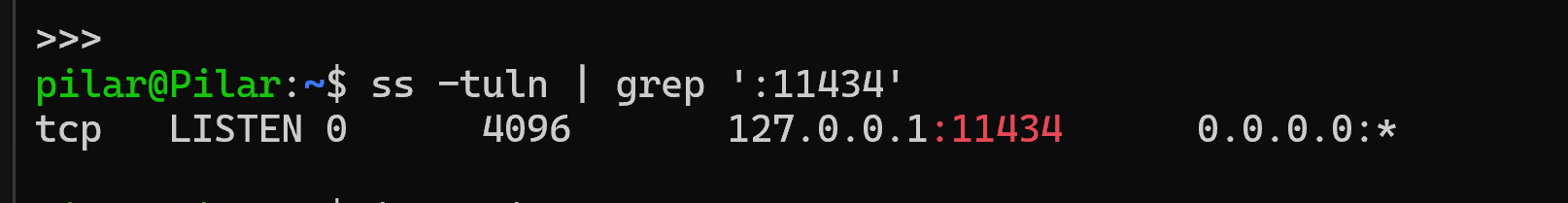

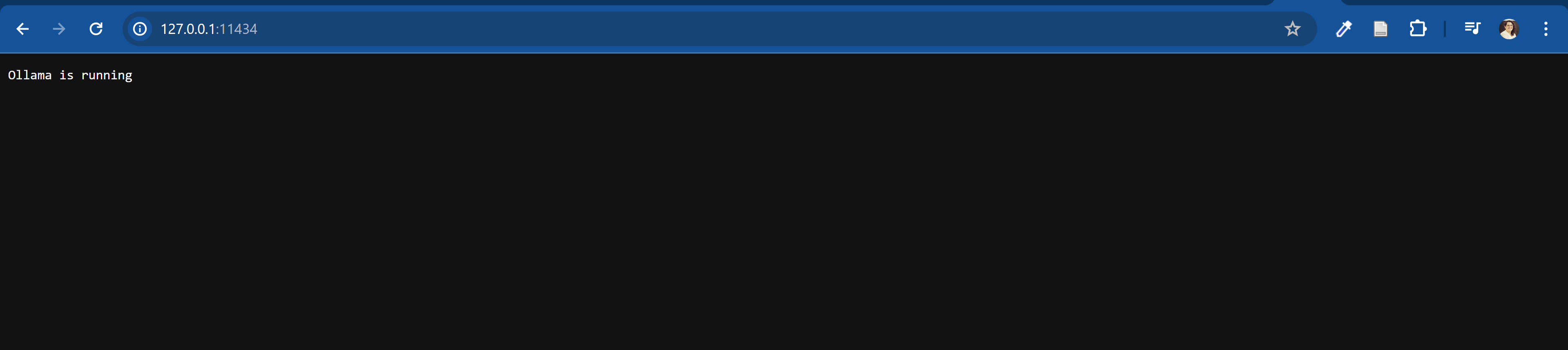
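Besides chatting in the terminal, you can test the model through Ollama's HTTP API, the same interface that clients connect to over the network. This minimal sketch uses only Python's standard library. It sends a non-streaming request to the /api/generate endpoint on Ollama's default address and prints the "response" field of the reply. The model name deepseek-coder is an assumption, so replace MODEL with whatever you pulled. The helper names build_generate_request, extract_text and ask are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default listen address
MODEL = "deepseek-coder"  # assumption: replace with the model you pulled


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(body: bytes) -> str:
    """With stream=False, the reply is one JSON object; 'response' holds the text."""
    return json.loads(body)["response"]


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text."""
    with urllib.request.urlopen(build_generate_request(model, prompt),
                                timeout=timeout) as resp:
        return extract_text(resp.read())


if __name__ == "__main__":
    try:
        print(ask(MODEL, "Write a Python function that reverses a string."))
    except OSError as exc:
        # URLError and HTTPError (e.g. a model that was never pulled) are OSError subclasses.
        print(f"Request to Ollama at {OLLAMA_URL} failed: {exc}")
```

Setting "stream" to False keeps the example short. Without it, Ollama sends the answer as a series of JSON lines that you would have to read one by one.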

Step 4: Verify the Service is Running on localhost

- Ensure that Ollama is listening on IP address 127.0.0.1 and port 11434 (Ollama's defaults).

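- Configure port forwarding in Windows: open Command Prompt as Administrator and run:

netsh interface portproxy add v4tov4 listenport=80 listenaddress=0.0.0.0 connectport=11434 connectaddress=127.0.0.1

This rule makes Windows listen on port 80 on all network interfaces and forward those connections to Ollama at 127.0.0.1:11434.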

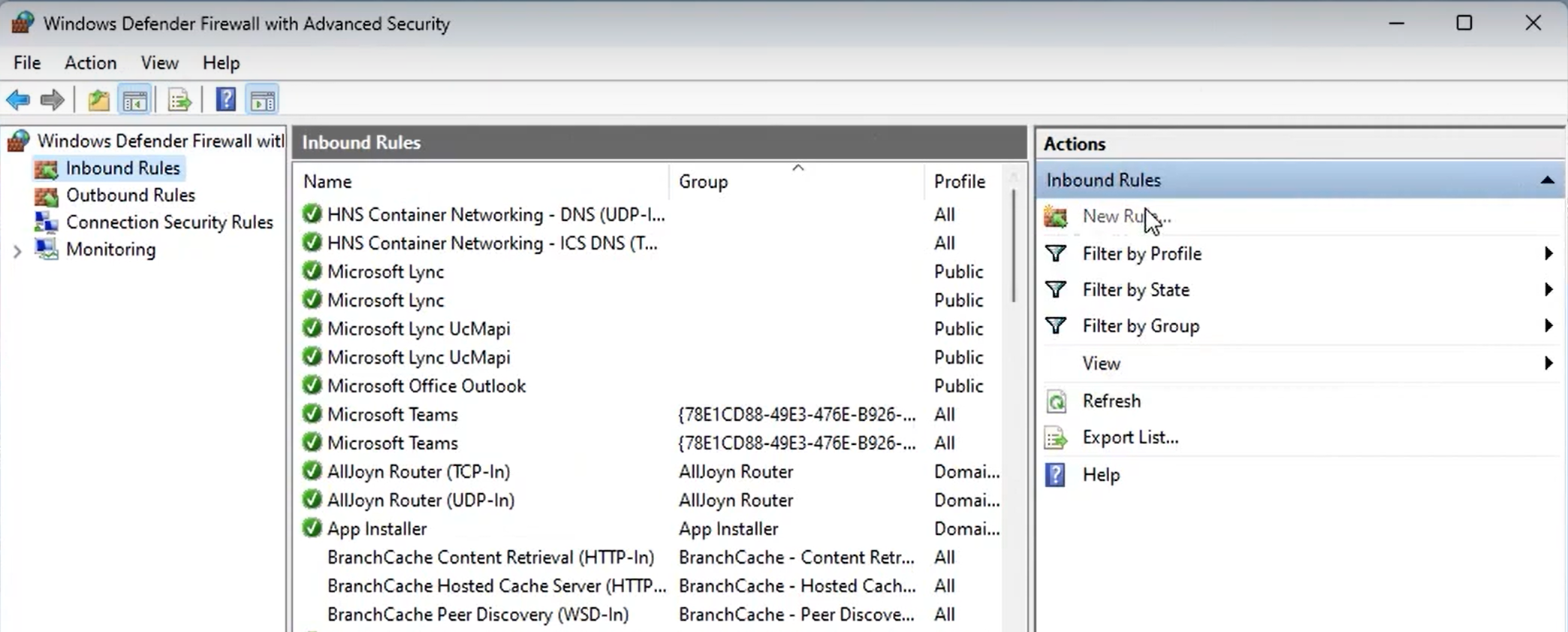

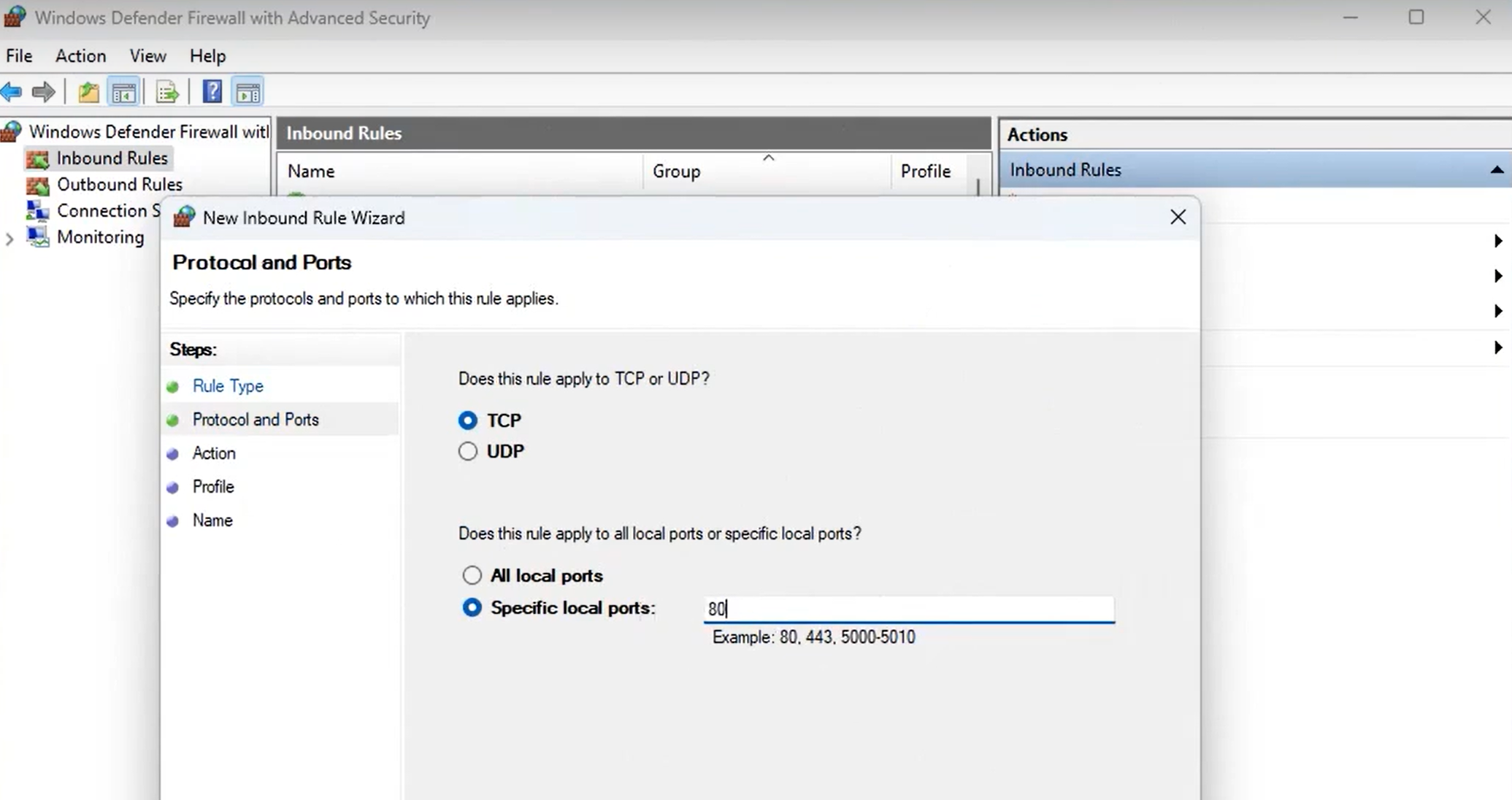

- Enable the rule in Windows Defender Firewall: open Windows Defender Firewall and allow inbound connections on port 11434. If you use the port-forwarding rule, also allow port 80, since that is the port it listens on.

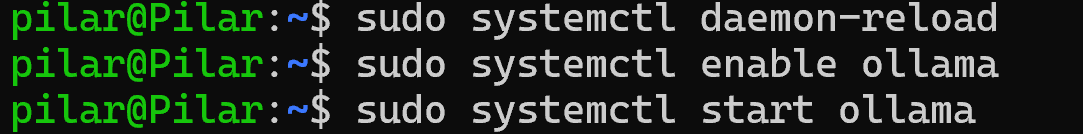
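To check the ports without a browser, you can try a plain TCP connection. This sketch is meant to be run on the Windows side, for example with a Windows Python install, so the connection takes the same path as other Windows programs. It probes port 11434 (Ollama) and port 80 (the listenport of the netsh rule). The helper name port_is_open is hypothetical. To test from another machine, you would replace 127.0.0.1 with the Windows host's network address.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    # 11434: Ollama itself; 80: the port exposed by the netsh port-proxy rule.
    for port in (11434, 80):
        state = "open" if port_is_open("127.0.0.1", port) else "closed"
        print(f"127.0.0.1:{port} is {state}")
```

An open port only proves that something is listening. It does not prove that the listener is Ollama.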

Step 5: Run Ollama

- Do not close WSL: Keep the WSL instance and the Ollama service running.

- Check from the browser: in Windows, open a web browser and navigate to http://127.0.0.1:11434 to verify the service is accessible. If it is, the page displays "Ollama is running".
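The browser check can also be scripted. This minimal sketch requests the root URL and looks for the "Ollama is running" text that Ollama returns there. That confirms you are talking to Ollama and not just to an open port. The function names is_ollama_banner and check_ollama are hypothetical.

```python
import http.client
import urllib.request


def is_ollama_banner(body: str) -> bool:
    """Ollama answers GET / with the plain-text message 'Ollama is running'."""
    return "Ollama is running" in body


def check_ollama(base_url: str = "http://127.0.0.1:11434", timeout: float = 5.0) -> bool:
    """Return True if base_url answers like an Ollama server, False otherwise."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and is_ollama_banner(body)
    except (OSError, http.client.HTTPException):
        # Refused connections, timeouts, URLError and HTTPError are OSError subclasses.
        return False


if __name__ == "__main__":
    url = "http://127.0.0.1:11434"
    print("Ollama is reachable." if check_ollama(url) else f"No Ollama response at {url}")
```

Passing "http://127.0.0.1:80" as base_url would exercise the port-forwarding rule instead of the direct connection.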

Step 6: Start CodeGPT in VSCode

- Install CodeGPT: Follow the instructions in this video tutorial to install CodeGPT in VSCode.

- Start CodeGPT: Open VSCode and run the CodeGPT extension.

Conclusion

You should now also be able to use CodeGPT with Ollama running on other remote servers, as long as you verify that the server where Ollama runs is accessible from localhost.

By following these steps, you can connect an Ollama service running in WSL to the CodeGPT extension in VSCode for a smooth and efficient integration.
